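A few days ago a friend needed a large number of images for a variety of keywords, so I wrote her :smiley: a multithreaded image crawler. It targets Baidu Images, and by swapping the endpoint it also works for Sogou Images and 360 Images. I also added a simple deduplication step.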

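The scripts are written in Python 3 and use only basic modules, with no framework (I don't know any anyway); these modules are enough for the job. Some parameters, such as the keyword and the number of threads, have to be configured by hand, so if you have no programming experience at all, I don't recommend using them.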
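To make the "simple deduplication" concrete, here is a minimal sketch of the same idea in isolation. The fingerprint recipe (MD5 over the `Content-Length` value followed by the URL) comes from the scripts in this post; the `SeenImages` class, its method names, and the lock are assumptions added for illustration, so that the check-then-insert step stays safe when many threads call it at once.

```python
import threading
from hashlib import md5


class SeenImages:
    """Thread-safe record of image fingerprints (hypothetical helper)."""

    def __init__(self):
        self._seen = set()
        self._lock = threading.Lock()

    @staticmethod
    def fingerprint(content_length, url):
        # Same recipe as the scripts: MD5 over Content-Length followed by the URL.
        return md5((str(content_length) + url).encode('utf-8')).hexdigest()

    def add_if_new(self, content_length, url):
        """Return True the first time a fingerprint is seen, False afterwards."""
        key = self.fingerprint(content_length, url)
        with self._lock:  # check and insert as one atomic step
            if key in self._seen:
                return False
            self._seen.add(key)
            return True

    def __len__(self):
        with self._lock:
            return len(self._seen)
```

Because the URL is part of the fingerprint, this only catches the same URL being queued more than once; the same picture served from two different URLs still counts as two images. Hashing the downloaded bytes instead would catch those, at the cost of downloading each image before deciding.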

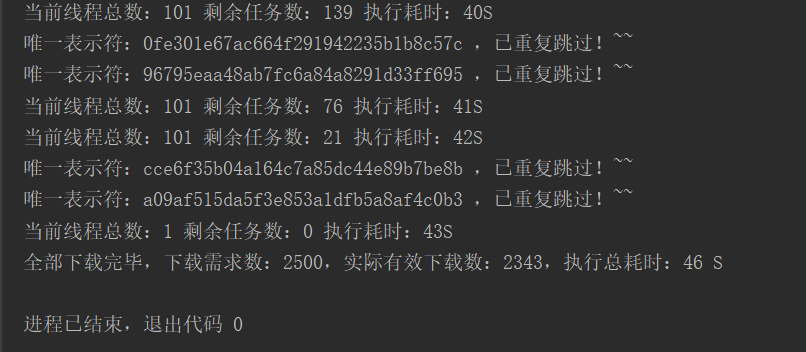

Crawler results:

Baidu Images code:

    import os
    import queue
    import threading
    import time
    from hashlib import md5

    import requests
    import urllib3

    urllib3.disable_warnings()  # suppress SSL warnings


    def getManyPages(keyword, pages):
        params = {
            'tn': 'resultjson_com',
            'ipn': 'rj',
            'ct': 201326592,
            'is': '',
            'fp': 'result',
            'queryWord': keyword,
            'cl': 2,
            'lm': -1,
            'ie': 'utf-8',
            'oe': 'utf-8',
            'adpicid': '',
            'st': -1,
            'z': '',
            'ic': 0,
            'word': keyword,
            's': '',
            'se': '',
            'tab': '',
            'width': '',
            'height': '',
            'face': 0,
            'istype': 2,
            'qc': '',
            'nc': 1,
            'fr': '',
            'pn': pages,  # start offset
            'rn': 50,  # number of results returned
            'gsm': '1e',
            '1532325785686': ''  # just a timestamp; change it as shown in the screenshot below
        }
        url = 'https://image.baidu.com/search/acjson'  # Baidu Images endpoint
        try:
            res = requests.get(url, params=params, timeout=(5, 20), verify=False)
            return res.json().get('data') or []  # never return None to the caller
        except Exception:
            print('Failed to fetch JSON data from Baidu Images')
            return []


    def Run():
        while True:
            try:
                data2 = Plist.get_nowait()  # non-blocking, so a worker cannot hang on an empty queue
            except queue.Empty:
                break
            picpath = FilePath + data2['filename']
            try:
                res = requests.get(data2['url'], timeout=(5, 30), stream=True)
                length = int(res.headers.get('Content-Length', 0))  # the header may be missing
                if res.status_code == requests.codes.ok and length > 0:
                    destr = (str(length) + data2['url']).encode(encoding='UTF-8')
                    m = md5(destr).hexdigest()
                    # --------------------------
                    if m in Mlist:  # duplicate, skip it
                        print('Identifier ' + m + ' already seen, skipping')
                        continue
                    else:
                        Mlist.append(m)
                    # --------------------------
                    with open(picpath, 'wb') as file:
                        file.write(res.content)
            except requests.RequestException as err:
                Plist.put(data2)  # put the task back so it is retried
                print('Download failed, will retry later: ' + str(err))
                continue


    if __name__ == '__main__':
        # ------------------------------------------
        KeyWords = '白色轿车'  # search keyword ("white sedan")
        Num = 2  # number of pages to fetch, 50 images per page
        Tnum = 100  # number of download threads
        # ------------------------------------------
        Name = 0  # counter for sequential file names
        Tlist = list()  # worker threads
        Mlist = list()  # unique image identifiers
        Plist = queue.Queue(Num * 100)  # queue of image download tasks
        FilePath = './baidu/' + KeyWords + '/'  # save path (relative)
        Start = time.time()
        if not os.path.exists(FilePath):
            os.makedirs(FilePath)

        for xx in range(Num):
            dataList = getManyPages(KeyWords, xx * 50)
            for x in dataList:
                if 'thumbURL' in x:
                    filename = 'baidu_' + KeyWords + '_' + str(Name) + '.jpg'
                    data = {'filename': filename, 'url': x['thumbURL']}
                    Plist.put(data)
                    Name += 1
            print('Images queued so far: ' + str(Plist.qsize()) + ' ' + str(xx))

        print('The actual count may be slightly lower than requested; images queued: ' + str(Plist.qsize()))
        print('Starting download threads')
        for x in range(Tnum):
            t = threading.Thread(target=Run)
            t.daemon = False
            t.start()
            Tlist.append(t)
        print('All threads started, downloading at full speed')
        while not Plist.empty() and threading.active_count() > 1:
            time.sleep(1)
            print('Active threads: ' + str(threading.active_count()) + ' Remaining tasks: ' + str(Plist.qsize()) + ' Elapsed: ' + str(
                round(time.time() - Start)) + ' s')
            for x in range(len(Tlist)):
                if not Tlist[x].is_alive() and not Plist.empty():
                    print('A thread has died, restarting it')
                    t = threading.Thread(target=Run)
                    t.daemon = False
                    t.start()
                    Tlist[x] = t  # replace the dead thread in place
        for t in Tlist:
            t.join()  # wait for in-flight downloads before reporting
        print('All downloads finished. Requested: ' + str(Num * 50) + ', actually downloaded: ' + str(len(Mlist)) + ', total time: ' + str(
            round(time.time() - Start)) + ' s')
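The script above starts its threads by hand and restarts any that die. As a point of comparison, the same fan-out can be sketched with the standard library's `concurrent.futures.ThreadPoolExecutor`, which is a different technique from the one used in this post. In this sketch `download_all` and the `fetch` callable are hypothetical names: `fetch` stands in for the body of `Run()` and is assumed to return `True` on success.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_all(tasks, fetch, max_workers=8):
    """Run fetch(task) for every task on a thread pool.

    `fetch` is a hypothetical callable that downloads one image and
    returns True on success; it stands in for the body of Run().
    """
    succeeded, failed = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Map each future back to the task that produced it.
        futures = {pool.submit(fetch, task): task for task in tasks}
        for future in as_completed(futures):
            task = futures[future]
            try:
                ok = future.result()
            except Exception:
                ok = False  # an exception in one task does not stop the pool
            (succeeded if ok else failed).append(task)
    return succeeded, failed
```

The list of failed tasks can be passed to `download_all` again for a retry, which replaces the "put it back on the queue" step of the hand-written loop.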

Sogou Images code:

    import os
    import queue
    import threading
    import time
    from hashlib import md5

    import requests
    import urllib3

    urllib3.disable_warnings()  # suppress SSL warnings


    def getManyPages(keyword, pages):
        url = 'https://pic.sogou.com/pics?query=' + keyword + '&start=' + str(pages) + '&reqType=ajax'  # Sogou Images endpoint
        try:
            res = requests.get(url, timeout=(5, 20), verify=False)
            return res.json().get('items') or []  # never return None to the caller
        except Exception:
            print('Failed to fetch JSON data')
            return []


    def Run():
        while True:
            try:
                data2 = Plist.get_nowait()  # non-blocking, so a worker cannot hang on an empty queue
            except queue.Empty:
                break
            picpath = FilePath + data2['filename']
            try:
                res = requests.get(data2['url'], timeout=(5, 30), stream=True)
                length = int(res.headers.get('Content-Length', 0))  # the header may be missing
                if res.status_code == requests.codes.ok and length > 0:
                    destr = (str(length) + data2['url']).encode(encoding='UTF-8')
                    m = md5(destr).hexdigest()
                    # --------------------------
                    if m in Mlist:  # duplicate, skip it
                        print('Identifier ' + m + ' already seen, skipping')
                        continue
                    else:
                        Mlist.append(m)
                    # --------------------------
                    with open(picpath, 'wb') as file:
                        file.write(res.content)
            except requests.RequestException as err:
                Plist.put(data2)  # put the task back so it is retried
                print('Download failed, will retry later: ' + str(err))
                continue


    if __name__ == '__main__':
        # ------------------------------------------
        KeyWords = '轿车'  # search keyword ("sedan")
        Num = 50  # number of pages to fetch, 48 images per page
        Tnum = 100  # number of download threads
        # ------------------------------------------
        Name = 0  # counter for sequential file names
        Tlist = list()  # worker threads
        Mlist = list()  # unique image identifiers
        Plist = queue.Queue(Num * 100)  # queue of image download tasks
        FilePath = './sougou/' + KeyWords + '/'  # save path (relative)
        Start = time.time()
        if not os.path.exists(FilePath):
            os.makedirs(FilePath)

        for xx in range(Num):
            dataList = getManyPages(KeyWords, xx * 48)
            for x in dataList:
                if 'thumbUrl' in x:
                    filename = 'sougou_' + KeyWords + '_' + str(Name) + '.jpg'
                    data = {'filename': filename, 'url': x['thumbUrl']}
                    Plist.put(data)
                    Name += 1
            print('Images queued so far: ' + str(Plist.qsize()) + ' ' + str(xx))

        print('The actual count may be slightly lower than requested; images queued: ' + str(Plist.qsize()))
        print('Starting download threads')
        for x in range(Tnum):
            t = threading.Thread(target=Run)
            t.daemon = False
            t.start()
            Tlist.append(t)
        print('All threads started, downloading at full speed')
        while not Plist.empty() and threading.active_count() > 1:
            time.sleep(1)
            print('Active threads: ' + str(threading.active_count()) + ' Remaining tasks: ' + str(Plist.qsize()) + ' Elapsed: ' + str(
                round(time.time() - Start)) + ' s')
            for x in range(len(Tlist)):
                if not Tlist[x].is_alive() and not Plist.empty():
                    print('A thread has died, restarting it')
                    t = threading.Thread(target=Run)
                    t.daemon = False
                    t.start()
                    Tlist[x] = t  # replace the dead thread in place
        for t in Tlist:
            t.join()  # wait for in-flight downloads (replaces the fixed 3-second sleep)
        print('All downloads finished. Requested: ' + str(Num * 48) + ', actually downloaded: ' + str(len(Mlist)) + ', total time: ' + str(
            round(time.time() - Start)) + ' s')
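The Sogou request URL above is assembled by plain string concatenation, so a keyword containing characters such as `&`, `#`, or a space would change the meaning of the query string. A minimal sketch of building the same URL with `urllib.parse.urlencode` follows; the endpoint and parameter names are taken from the script, while the helper name `build_sogou_url` is an assumption for illustration.

```python
from urllib.parse import urlencode


def build_sogou_url(keyword, start):
    """Build the Sogou request URL with a percent-encoded query string."""
    base = 'https://pic.sogou.com/pics'  # endpoint taken from the script above
    query = urlencode({'query': keyword, 'start': start, 'reqType': 'ajax'})
    return base + '?' + query
```

Passing the same dictionary as `params=` to `requests.get`, as the Baidu script already does, gives the same encoding without a helper.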

360 Images code:

    import os
    import queue
    import threading
    import time
    from hashlib import md5

    import requests
    import urllib3

    urllib3.disable_warnings()  # suppress SSL warnings


    def getManyPages(keyword, pages):
        url = 'http://image.so.com/j?q=' + keyword + '&pn=50&sn=' + str(pages)  # 360 Images endpoint; pn is the number of results returned
        try:
            res = requests.get(url, timeout=(5, 20), verify=False)
            return res.json().get('list') or []  # never return None to the caller
        except Exception:
            print('Failed to fetch JSON data from 360 Images')
            return []


    def Run():
        while True:
            try:
                data2 = Plist.get_nowait()  # non-blocking, so a worker cannot hang on an empty queue
            except queue.Empty:
                break
            picpath = FilePath + data2['filename']
            try:
                res = requests.get(data2['url'], timeout=(5, 30), stream=True)
                length = int(res.headers.get('Content-Length', 0))  # the header may be missing
                if res.status_code == requests.codes.ok and length > 0:
                    destr = (str(length) + data2['url']).encode(encoding='UTF-8')
                    m = md5(destr).hexdigest()
                    # --------------------------
                    if m in Mlist:  # duplicate, skip it
                        print('Identifier ' + m + ' already seen, skipping')
                        continue
                    else:
                        Mlist.append(m)
                    # --------------------------
                    with open(picpath, 'wb') as file:
                        file.write(res.content)
            except requests.RequestException as err:
                Plist.put(data2)  # put the task back so it is retried
                print('Download failed, will retry later: ' + str(err))
                continue


    if __name__ == '__main__':
        # ------------------------------------------
        KeyWords = '黑色轿车'  # search keyword ("black sedan")
        Num = 40  # number of pages to fetch, 50 images per page
        Tnum = 100  # number of download threads
        # ------------------------------------------
        Name = 0  # counter for sequential file names
        Tlist = list()  # worker threads
        Mlist = list()  # unique image identifiers
        Plist = queue.Queue(Num * 100)  # queue of image download tasks
        FilePath = './360/' + KeyWords + '/'  # save path (relative)
        Start = time.time()
        if not os.path.exists(FilePath):
            os.makedirs(FilePath)

        for xx in range(Num):
            dataList = getManyPages(KeyWords, xx * 50)
            for x in dataList:
                if 'thumb_bak' in x:
                    filename = '360_' + KeyWords + '_' + str(Name) + '.jpg'
                    data = {'filename': filename, 'url': x['thumb_bak']}
                    Plist.put(data)
                    Name += 1
            print('Images queued so far: ' + str(Plist.qsize()) + ' ' + str(xx))

        print('The actual count may be slightly lower than requested; images queued: ' + str(Plist.qsize()))
        print('Starting download threads')
        for x in range(Tnum):
            t = threading.Thread(target=Run)
            t.daemon = False
            t.start()
            Tlist.append(t)
        print('All threads started, downloading at full speed')
        while not Plist.empty() and threading.active_count() > 1:
            time.sleep(1)
            print('Active threads: ' + str(threading.active_count()) + ' Remaining tasks: ' + str(Plist.qsize()) + ' Elapsed: ' + str(
                round(time.time() - Start)) + ' s')
            for x in range(len(Tlist)):
                if not Tlist[x].is_alive() and not Plist.empty():
                    print('A thread has died, restarting it')
                    t = threading.Thread(target=Run)
                    t.daemon = False
                    t.start()
                    Tlist[x] = t  # replace the dead thread in place
        for t in Tlist:
            t.join()  # wait for in-flight downloads (replaces the fixed 3-second sleep)
        print('All downloads finished. Requested: ' + str(Num * 50) + ', actually downloaded: ' + str(len(Mlist)) + ', total time: ' + str(
            round(time.time() - Start)) + ' s')
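The three scripts differ in only a few places: the JSON key that holds the result list, the field that holds the thumbnail URL, the page size, and the file-name prefix. A rough sketch of pulling those differences into one table is shown below. The values come from the scripts above; the `Site` dataclass and the `page_offsets` and `build_tasks` helpers are hypothetical names introduced for illustration, and the request itself is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str         # used as the file-name prefix
    result_key: str   # JSON key holding the list of results
    thumb_field: str  # field of each result holding the thumbnail URL
    page_size: int    # step between successive start offsets


SITES = {
    'baidu': Site('baidu', 'data', 'thumbURL', 50),
    'sougou': Site('sougou', 'items', 'thumbUrl', 48),
    '360': Site('360', 'list', 'thumb_bak', 50),
}


def page_offsets(site, num_pages):
    """Start offsets passed to getManyPages, one per page."""
    return [page * site.page_size for page in range(num_pages)]


def build_tasks(site, keyword, items, first_index=0):
    """Turn one page of results into download tasks, skipping entries with no thumbnail."""
    tasks = []
    index = first_index
    for item in items or []:
        if site.thumb_field in item:
            filename = f'{site.name}_{keyword}_{index}.jpg'
            tasks.append({'filename': filename, 'url': item[site.thumb_field]})
            index += 1
    return tasks
```

For example, `page_offsets(SITES['sougou'], 3)` gives `[0, 48, 96]`, matching the `xx * 48` step in the Sogou script.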